Write amplification

This article was partially translated from the English-language article "Write amplification" (revision of 09:34, 19 December 2012 (UTC)). (February 2013)

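Write amplification (WA) is an undesirable phenomenon in flash memory and solid-state drives (SSDs) in which the amount of data physically written to the flash chips is larger than the amount of data the host actually wrote.

Flash memory must be erased before it can be rewritten, and performing that erase requires user data and metadata to be moved and rewritten one or more times. This multiplying effect increases the number of writes, which shortens the period over which the SSD can operate reliably. The extra writes also consume bandwidth to the flash memory, which mainly reduces the random write performance of the SSD.[1][2] Many factors affect the write amplification of an SSD. Some can be controlled by the user, while others are a direct result of the data being written and of how the SSD is used.

Intel and SiliconSystems (acquired by Western Digital in 2009) were using the term write amplification in their papers and publications by 2008. Write amplification is normally measured as the ratio of the data written to the flash memory to the data written by the host, and without compression it cannot fall below 1. SandForce claimed that by using compression it achieved a typical write amplification of 0.5, and 0.14 in the best case with the SF-2281 controller.[3]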

Basic SSD operation

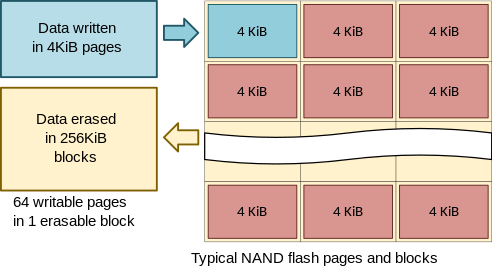

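A defining property of flash memory is that data cannot, in principle, be directly overwritten in place, unlike on a hard disk drive. When data is first written to an SSD, the flash cells involved are all in an erased state, so the data is written one page at a time (a page is typically 4 KiB).

The SSD controller, which manages the flash memory and the interface to the host system, uses a logical-to-physical mapping scheme known as logical block addressing (LBA), which is part of the flash translation layer (FTL).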

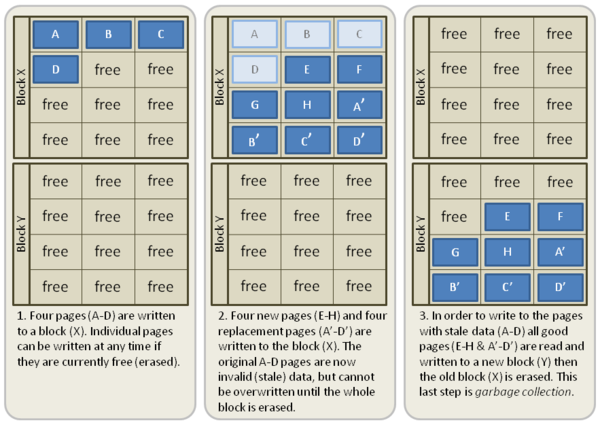

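When new data arrives to replace older data that has already been written, the SSD controller writes the new data to a new location and updates the logical mapping to point to the new physical location. The data in the old location is no longer valid, and that location must be erased before it can be written again.[1][5]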
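The out-of-place update described above can be sketched in a few lines of Python. This is a toy model rather than any real controller's firmware: the class `ToyFTL` and its fields are hypothetical names chosen for illustration, and the model assumes one logical page maps to exactly one physical page.

```python
class ToyFTL:
    """Toy flash translation layer: maps logical pages to physical pages."""

    def __init__(self, num_physical_pages):
        self.num_physical_pages = num_physical_pages
        self.mapping = {}     # logical page address -> physical page
        self.stale = set()    # physical pages that hold invalid (old) data
        self.next_free = 0    # next erased physical page to program

    def write(self, lba):
        """Write (or overwrite) one logical page without touching the old copy."""
        if self.next_free >= self.num_physical_pages:
            raise RuntimeError("no erased pages left; garbage collection needed")
        old = self.mapping.get(lba)
        if old is not None:
            self.stale.add(old)          # the old copy stays in flash until erased
        self.mapping[lba] = self.next_free
        self.next_free += 1
        return self.mapping[lba]


ftl = ToyFTL(num_physical_pages=8)
ftl.write(lba=5)   # first write of logical page 5 lands in physical page 0
ftl.write(lba=5)   # the overwrite goes to physical page 1; page 0 becomes stale
print(ftl.mapping, ftl.stale)
```

The second write leaves physical page 0 occupied by stale data, which is exactly the space that must later be erased before it can be reused.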

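Flash memory can be programmed and erased only a limited number of times. This limit is often referred to as the maximum number of program/erase cycles (P/E cycles) the flash memory can sustain over its life. Single-level cell (SLC) flash is designed for higher performance and longer endurance, and can typically operate for between 50,000 and 100,000 cycles. As of 2011, multi-level cell (MLC) flash is designed for lower-cost applications and has a greatly reduced cycle count of typically 3,000 to 5,000. A lower write amplification is more desirable, because it means fewer P/E cycles are consumed on the flash memory and the life of the SSD is extended.[1]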
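To make the link between write amplification and drive life concrete, the following Python sketch estimates how much data a host could write before the flash reaches its rated P/E cycles. It rests on a simplifying assumption that is not stated in the sources above: wear is spread perfectly evenly, so the budget is capacity × P/E cycles ÷ WA. The function name and the 128 GB / 3,000-cycle figures are illustrative only.

```python
def host_writes_before_wearout(capacity_bytes, pe_cycles, write_amplification):
    """Rough host-write budget, assuming perfectly even wear across all blocks."""
    if write_amplification <= 0:
        raise ValueError("write amplification must be positive")
    return capacity_bytes * pe_cycles / write_amplification


GB = 10**9
TB = 10**12
for wa in (1.0, 2.0, 4.0):
    budget = host_writes_before_wearout(128 * GB, 3000, wa)
    print(f"WA = {wa}: about {budget / TB:.0f} TB of host writes")
```

Under this model, doubling the write amplification halves the amount of host data the drive can absorb.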

Calculating the value

Write amplification was present in SSDs before the term was defined, but it was in 2008 that Intel[6][7] and SiliconSystems began using the term in their papers and publications.[8]

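Every SSD has a write amplification value, which is expressed by the following formula:[1][9][10][11]

$$\mathrm{WA} = \frac{\mathrm{NAND}}{\mathrm{HOST}}$$

where

- WA is the write amplification,
- NAND is the amount of data written to the flash memory, and
- HOST is the amount of data written by the host.

To measure the write amplification of a given SSD accurately, the test writes should run long enough for the drive to reach a steady state.[2]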
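As a small illustration of the ratio defined above, the following Python sketch computes the write amplification from two byte counters. The function name and the sample counter values are made up for the example; how a real drive exposes such counters is not covered by the sources.

```python
def write_amplification(nand_bytes, host_bytes):
    """Ratio of data written to flash to data written by the host."""
    if host_bytes <= 0:
        raise ValueError("host_bytes must be positive")
    return nand_bytes / host_bytes


GIB = 2**30
# The flash absorbed three times what the host sent.
print(write_amplification(nand_bytes=300 * GIB, host_bytes=100 * GIB))
# A value below 1 is only reachable when the controller compresses data.
print(write_amplification(nand_bytes=50 * GIB, host_bytes=100 * GIB))
```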

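Factors affecting the value

Many factors affect the write amplification of an SSD. The table below lists the primary factors and how each affects it. For variable factors, the table shows whether the relationship is direct or inverse. For example, as the amount of over-provisioning increases, the write amplification decreases (an inverse relationship). If the factor is a toggle (enabled or disabled), the relationship is shown as positive or negative.[1][12][9]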

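| Factor | Description | Type | Relationship* |
|---|---|---|---|
| Garbage collection | The efficiency of the algorithm used to pick the best block to erase and rewrite next | Variable | Inverse (good) |
| Over-provisioning | The percentage of physical capacity allocated to the SSD controller as spare area (outside the user area) | Variable | Inverse (good) |
| TRIM | A SATA command that tells the SSD controller which data can be discarded during garbage collection | Toggle | Positive (good) |
| Free user space | The percentage of the user area that holds no actual user data. Requires the TRIM command to be an effective factor. | Variable | Inverse (good) |
| Secure Erase | Erases all user data and related control metadata, resetting the SSD to its out-of-box performance. Effective until garbage collection resumes. | Toggle | Positive (good) |
| Wear leveling | The efficiency of the algorithm that evens out the rewrite count of all blocks as far as possible | Variable | Direct (bad) |
| Separating static and dynamic data | Grouping data according to how often it changes | Toggle | Positive (good) |
| Sequential writes | In theory, sequential writes have a write amplification of 1, but other factors still affect the value. | Toggle | Positive (good) |
| Random writes | Writing to non-sequential logical block addresses has the greatest impact on write amplification. | Toggle | Negative (bad) |
| Data compression and elimination of redundant data | The amount of redundant data removed before the data is written to the flash memory | Variable | Inverse (good) |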

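| Relationship | Description |
|---|---|
| Direct (bad) | As the factor increases, the write amplification increases |
| Inverse (good) | As the factor increases, the write amplification decreases |
| Positive (good) | When the factor is enabled, the write amplification decreases |
| Negative (bad) | When the factor is enabled, the write amplification increases |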

In addition to the factors above, the management of failure modes such as read disturb[13] can also affect the write amplification (see the section on garbage collection).

For defragmentation of SSDs, see "Defragmentation on SSDs".

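Garbage collection

Data is written to the flash memory in units called pages, each made up of multiple memory cells. However, the memory can only be erased in larger units called blocks, each made up of multiple pages.[4] If the data in some pages of a block is no longer needed (these are called stale pages), only the pages of that block that still hold needed data are transferred (written) to another, previously erased block.[2] Because the stale pages are not transferred, the corresponding pages in the destination block remain free and can receive new data. This whole process is called garbage collection (GC).[1][10] Every SSD includes some form of garbage collection, but they differ in when and how they perform it.[10] Garbage collection has a large impact on the write amplification of an SSD.[1][10]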
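The copy step of garbage collection can be sketched as follows. This is an illustrative Python model, not a real controller algorithm: a block is represented as a plain list of page states, and `reclaim_block` is a hypothetical helper name.

```python
def reclaim_block(block):
    """Garbage-collect one block.

    `block` is a list of page states, each "valid" or "stale".
    Returns (destination_block, pages_copied); the source block is then erased.
    """
    valid_pages = [page for page in block if page == "valid"]
    free_pages = len(block) - len(valid_pages)
    destination = valid_pages + ["free"] * free_pages
    return destination, len(valid_pages)


source = ["valid", "stale", "valid", "stale", "stale", "valid", "stale", "stale"]
destination, copied = reclaim_block(source)
freed = destination.count("free")
# Filling the freed pages with host data costs `copied` extra flash writes.
step_wa = (copied + freed) / freed
print(destination, copied, freed, step_wa)
```

In this example three valid pages are copied to free up five pages, so writing five pages of host data costs eight page writes in flash. The fewer valid pages the chosen block contains, the closer this per-step ratio gets to 1.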

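Reads do not require the flash memory to be erased, so they are not normally associated with write amplification. However, a block is rewritten before a failure mode such as read disturb[13] can occur. This is nevertheless considered to have little material effect on the write amplification of the drive.[14]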

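Background garbage collection (BGC)

The garbage collection process involves reading from and rewriting to the flash memory. This means that a new write from the host first requires a read of the whole block, a write of the parts of that block that still contain valid data, and then a write of the new data. This can significantly reduce the performance of the system.[15]

Some SSD controllers implement a feature known as background garbage collection (BGC) or idle-time garbage collection (ITGC), in which the controller uses idle time to consolidate blocks of flash memory before new write data arrives from the host. This prevents the performance of the device from degrading.[16]

If the SSD controller garbage-collects all of the spare blocks in the background before they are needed, new data from the host can be written without first moving existing data, which lets the drive operate at its peak speed.

The trade-off is that some of those blocks of data are not actually needed by the host and will eventually be deleted, but the OS has not told the SSD controller this. As a result, soon-to-be-deleted data is rewritten to another location in the flash memory, which increases the write amplification. In some SSDs sold by OCZ, background garbage collection clears only a small number of blocks and then stops, which limits the amount of excessive writes.[10]

Another solution is an efficient garbage collection system that performs the necessary moves in parallel with host writes. This approach is more effective in write-heavy environments where the SSD is rarely idle.[17] The SandForce SSD controllers[15] and the systems from Violin Memory[9] have this capability.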

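Filesystem-aware garbage collection

In 2010, some manufacturers (notably Samsung) introduced SSD controllers that extended the concept of BGC by analyzing the file system used on the SSD to identify recently deleted files and unpartitioned space. The manufacturers claimed that this allows similar performance to be achieved even on systems (operating systems and SATA controller hardware) that do not support TRIM. The Samsung implementation appeared to assume and require an NTFS file system.[18] It is unclear whether this feature is still available in SSDs currently shipping from these manufacturers. Systematic data corruption has also been reported on these drives when they are not properly formatted using MBR and NTFS.[19]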

Over-provisioning

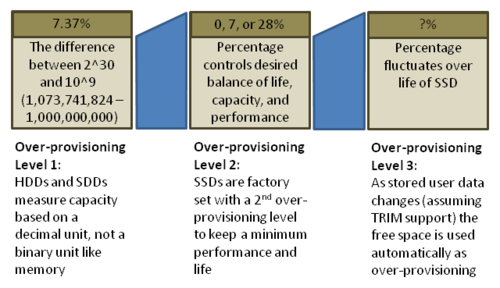

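Over-provisioning (OP) is the difference between the physical capacity of the flash memory and the logical capacity presented through the operating system as available to the user.

During garbage collection, wear leveling, and bad block mapping on the SSD, the additional space from over-provisioning helps lower the write amplification when the controller writes to the flash memory.

The first level of over-provisioning comes from how capacity is computed, and from the use of the unit gigabyte (GB) where gibibyte (GiB) would actually apply.

Both HDD and SSD vendors use the term GB to mean a decimal GB, or 1,000,000,000 (10^9) bytes. Flash memory, like most other electronic storage, is assembled in powers of two, so the physical capacity of an SSD is calculated on the basis of 1,073,741,824 (2^30) bytes per binary GB.

The difference between these two values is 7.37% (= (2^30 − 10^9) / 10^9).

Therefore, a 128 GB SSD with 0% over-provisioning provides 128,000,000,000 bytes to the user, out of 137,438,953,472 bytes of physical flash.

This initial 7.37% is typically not counted in the total over-provisioning figure.[20][21]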
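The 7.37% figure follows directly from the two definitions of a gigabyte, as this short Python check shows. The constant names are chosen for this example only.

```python
DECIMAL_GB = 10**9    # what vendors call a GB
BINARY_GB = 2**30     # how flash capacity is physically built up (a GiB)

hidden_fraction = (BINARY_GB - DECIMAL_GB) / DECIMAL_GB
print(f"{hidden_fraction:.2%}")

# A "128 GB" drive with 0% advertised over-provisioning:
physical_bytes = 128 * BINARY_GB
user_bytes = 128 * DECIMAL_GB
print(physical_bytes, user_bytes, physical_bytes - user_bytes)
```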

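The second level of over-provisioning comes from the manufacturer. This level is typically 0%, 7%, or 28%, based on the difference between the decimal GB of physical capacity and the decimal GB of space available to the user.

As an example, a manufacturer might publish a specification of 100 GB, 120 GB, or 128 GB for an SSD built on 128 GB of possible capacity.

These differences are 28%, 7%, and 0% respectively, and are the basis on which a manufacturer claims 28% over-provisioning for its drive.

This does not count the additional 7.37% of capacity that comes from the difference between decimal and binary GB.[20][21]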

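The third level of over-provisioning comes from end users, who give up capacity in exchange for endurance and performance.

Some SSDs provide a utility that lets the end user select the amount of over-provisioning. In addition, if an SSD is set up with OS partitions that cover less than 100% of the available space, the unpartitioned space is automatically used by the SSD as over-provisioning as well.

Over-provisioning takes away user capacity, but it gives back lower write amplification, greater endurance, and higher performance.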

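Calculating over-provisioning:

$$\mathrm{OP} = \frac{\text{physical capacity} - \text{user capacity}}{\text{user capacity}}$$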
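As a quick check of the 28%, 7% and 0% figures quoted in this section, the following Python sketch assumes that over-provisioning is measured as spare capacity relative to user capacity, both in decimal GB. The function name is hypothetical.

```python
def over_provisioning(physical_gb, user_gb):
    """Spare capacity as a fraction of user capacity."""
    if user_gb <= 0 or user_gb > physical_gb:
        raise ValueError("user capacity must be positive and fit in the physical capacity")
    return (physical_gb - user_gb) / user_gb


for user_gb in (100, 120, 128):
    print(f"{user_gb} GB user capacity: {over_provisioning(128, user_gb):.0%}")
```

Note that 120 GB of user capacity gives 6.67%, which the article rounds to 7%.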

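TRIM

TRIM is a SATA command that lets the operating system tell an SSD which blocks of previously saved data are no longer needed, as a result of file deletions or use of the format command. When an LBA is replaced by the OS, as with an overwrite of a file, the SSD knows that the original LBA can be marked as stale or invalid, and it does not preserve those blocks during garbage collection.

When the user or the OS erases a file (rather than just removing part of it), the file is typically marked as deleted, but the contents on the disk are not actually erased. Because of this, the SSD cannot tell that the LBAs the file previously occupied can be erased, and it keeps carrying those LBAs through garbage collection.[22][23][24]

The introduction of the TRIM command resolved this problem for operating systems such as Windows 7, Mac OS (the latest releases of Snow Leopard, Lion, and Mountain Lion, patched in some cases), and Linux 2.6.33 and later.

When a file is permanently deleted or the drive is formatted, the OS sends the TRIM command together with the LBAs that no longer contain valid data. This tells the SSD that those LBAs, which it had treated as in use, can be erased and reused.

This reduces the number of LBAs that must be moved during garbage collection and gives the SSD more free space. As a result, the SSD experiences lower write amplification and higher performance.[22][23][24]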
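The effect of TRIM on garbage collection can be illustrated with a small Python sketch. It models only the bookkeeping, not the SATA command itself: `live` is a hypothetical set of LBAs the drive believes are valid, and `pages_to_copy` is an illustrative helper.

```python
def pages_to_copy(block_lbas, live_lbas):
    """Count the pages GC must relocate: those whose LBA the drive still believes live."""
    return sum(1 for lba in block_lbas if lba in live_lbas)


live = {10, 11, 12, 13}    # LBAs the SSD believes hold valid data
block = [10, 11, 12, 13]   # LBAs stored in the block about to be collected

before = pages_to_copy(block, live)
live -= {12, 13}           # the OS deletes a file and TRIMs LBAs 12 and 13
after = pages_to_copy(block, live)
print(before, after)
```

Without the TRIM notification the drive would copy all four pages, even though two of them belong to a file the OS has already deleted.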

Limitations and dependencies of TRIM

The TRIM command also needs the support of the SSD. If the firmware in the SSD does not have support for the TRIM command, the LBAs received with the TRIM command will not be marked as invalid and the drive will continue to garbage collect the data assuming it is still valid. Only when the OS saves new data into those LBAs will the SSD know to mark the original LBA as invalid.[24] SSD Manufacturers that did not originally build TRIM support into their drives can either offer a firmware upgrade to the user, or provide a separate utility that extracts the information on the invalid data from the OS and separately TRIMs the SSD. The benefit would only be realized after each run of that utility by the user. The user could set up that utility to run periodically in the background as an automatically scheduled task.[15]

Support for the TRIM command does not necessarily mean that an SSD can perform at top speed immediately after a TRIM. The space freed by the TRIM command may be at random locations spread throughout the SSD. It takes a number of passes of writing data and garbage collecting before those spaces are consolidated and performance improves.[24]

Even after the OS and SSD are configured to support the TRIM command, other conditions can prevent any benefit from TRIM. As of early 2010, databases and RAID systems are not yet TRIM-aware and consequently do not know how to pass that information on to the SSD. In those cases the SSD will continue to save and garbage collect those blocks until the OS uses those LBAs for new writes.[24]

The actual benefit of the TRIM command depends upon the free user space on the SSD. If the user capacity on the SSD is 100 GB and the user has saved 95 GB of data to the drive, no TRIM operation can add more than 5 GB of free space for garbage collection and wear leveling. In those situations, increasing the amount of over-provisioning by 5 GB would give the SSD more consistent performance, because it would always have the extra 5 GB of free space without having to wait for the TRIM command to come from the OS.[24]

Free user space

The SSD controller will use any free blocks on the SSD for garbage collection and wear leveling. The portion of the user capacity which is free from user data (either already TRIMed or never written in the first place) will look the same as over-provisioning space (until the user saves new data to the SSD). If the user only saves data consuming 1/2 of the total user capacity of the drive, the other half of the user capacity will look like additional over-provisioning (as long as the TRIM command is supported in the system).[24][25]
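The idea that free user space behaves like extra over-provisioning can be put into numbers with a short Python sketch. The 7 GB of built-in spare area is a hypothetical figure, and the model makes one simplifying assumption that is flagged in the code: without TRIM, every user LBA is taken to have been written at some point, so the drive cannot tell that any user space is free.

```python
def spare_space_gb(op_gb, user_capacity_gb, user_data_gb, trim_supported=True):
    """Space the controller can treat as spare for GC and wear leveling.

    Simplifying assumption: without TRIM, every user LBA has been written at
    some point, so the drive cannot tell that any user space is free.
    """
    if not 0 <= user_data_gb <= user_capacity_gb:
        raise ValueError("user data must fit in the user capacity")
    free_user_gb = user_capacity_gb - user_data_gb if trim_supported else 0
    return op_gb + free_user_gb


print(spare_space_gb(op_gb=7, user_capacity_gb=100, user_data_gb=95))
print(spare_space_gb(op_gb=7, user_capacity_gb=100, user_data_gb=50))
print(spare_space_gb(op_gb=7, user_capacity_gb=100, user_data_gb=50, trim_supported=False))
```

A half-full drive with working TRIM has far more working space than a nearly full one, which is why free user space appears in the factor table as an inverse relationship.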

Secure erase

The ATA Secure Erase command is designed to remove all user data from a drive. With an SSD without integrated encryption, this command will put the drive back to its original out-of-box state. This will initially restore its performance to the highest possible level and the best (lowest number) possible write amplification, but as soon as the drive starts garbage collecting again the performance and write amplification will start returning to the former levels.[26][27] Many tools use the ATA Secure Erase command to reset the drive and provide a user interface as well. One free tool that is commonly referenced in the industry is called HDDErase.[27][28] Parted Magic provides a free bootable Linux system of disk utilities including secure erase.[29]

Drives which encrypt all writes on the fly can implement ATA Secure Erase in another way. They simply zeroize the old encryption key and generate a new random one each time a secure erase is done. In this way the old data can no longer be read, as it cannot be decrypted.[30] Some drives with integrated encryption may require a TRIM command to be sent to the drive to put it back into its original out-of-box state.[31]

Wear leveling

If a particular block were programmed and erased repeatedly without writing to any other blocks, the one block would wear out before all the other blocks, thereby prematurely ending the life of the SSD. For this reason, SSD controllers use a technique called wear leveling to distribute writes as evenly as possible across all the flash blocks in the SSD. In a perfect scenario, this would enable every block to be written to its maximum life so they all fail at the same time. Unfortunately, the process to evenly distribute writes requires data previously written and not changing (cold data) to be moved, so that data which are changing more frequently (hot data) can be written into those blocks. Each time data are relocated without being changed by the host system, this increases the write amplification and thus reduces the life of the flash memory. The key is to find an optimum algorithm which maximizes them both.[32]
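The two sides of wear leveling can be sketched in Python as follows. This is a minimal illustration under assumed data structures (a dictionary of per-block erase counts), not a published algorithm: the first helper picks where to write next, and the second decides when cold data should be moved, which is the step that adds write amplification. All names and the `max_spread` threshold are hypothetical.

```python
def pick_block_for_write(erase_counts, erased_blocks):
    """Program the erased block that has seen the fewest P/E cycles so far."""
    return min(erased_blocks, key=lambda block: erase_counts[block])


def cold_block_to_relocate(erase_counts, cold_blocks, max_spread):
    """Once wear is too uneven, pick the least-worn block holding cold data so
    its contents can be moved and the block reused. Returns None otherwise."""
    spread = max(erase_counts.values()) - min(erase_counts.values())
    if spread <= max_spread or not cold_blocks:
        return None
    return min(cold_blocks, key=lambda block: erase_counts[block])


erase_counts = {"A": 120, "B": 15, "C": 118, "D": 97}
print(pick_block_for_write(erase_counts, erased_blocks=["A", "D"]))
print(cold_block_to_relocate(erase_counts, cold_blocks=["B"], max_spread=50))
print(cold_block_to_relocate(erase_counts, cold_blocks=["B"], max_spread=200))
```

A larger `max_spread` relocates cold data less often, which lowers write amplification but lets wear become more uneven. That tension is the trade-off the paragraph above describes.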

Separating static and dynamic data

The separation of static and dynamic data to reduce write amplification is not a simple process for the SSD controller. The process requires the SSD controller to separate the LBAs with data which is constantly changing and requiring rewriting (dynamic data) from the LBAs with data which rarely changes and does not require any rewrites (static data). If the data is mixed in the same blocks, as with almost all systems today, any rewrites will require the SSD controller to garbage collect both the dynamic data (which caused the rewrite initially) and static data (which did not require any rewrite). Any garbage collection of data that would not have otherwise required moving will increase write amplification. Therefore separating the data will enable static data to stay at rest and if it never gets rewritten it will have the lowest possible write amplification for that data. The drawback to this process is that somehow the SSD controller must still find a way to wear level the static data because those blocks that never change will not get a chance to be written to their maximum P/E cycles.[1]
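A tiny worked example shows why mixing the two kinds of data costs extra writes. The Python sketch below uses made-up page labels (`h*` for dynamic data, `c*` for static data) and counts how many pages garbage collection must copy after all the dynamic data has been rewritten elsewhere.

```python
def gc_copies(blocks, rewritten):
    """Pages GC must copy to reclaim every block that contains stale pages."""
    copies = 0
    for block in blocks:
        stale = [lba for lba in block if lba in rewritten]
        if stale:                               # the block has reclaimable space
            copies += len(block) - len(stale)   # the rest must be moved first
    return copies


hot = ["h0", "h1", "h2", "h3"]     # dynamic data, rewritten constantly
cold = ["c0", "c1", "c2", "c3"]    # static data, never rewritten

mixed = [["h0", "c0", "h1", "c1"], ["h2", "c2", "h3", "c3"]]
separated = [hot, cold]

rewritten = set(hot)               # the host has rewritten all dynamic data
print(gc_copies(mixed, rewritten), gc_copies(separated, rewritten))
```

With mixed blocks, all four static pages are copied even though the host never touched them. With separated blocks, the dynamic block is entirely stale and can simply be erased.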

Sequential writes

When an SSD is writing data sequentially, the write amplification is equal to one, meaning there is no write amplification. The reason is that as the data is written, the entire block is filled sequentially with data related to the same file. If the OS determines that the file is to be replaced or deleted, the entire block can be marked as invalid, and there is no need to read parts of it to garbage collect and rewrite into another block. The block only needs to be erased, which is much easier and faster than the read-erase-modify-write process needed for randomly written data going through garbage collection.[12]

Random writes

The peak random write performance on an SSD is driven by plenty of free blocks after the SSD is completely garbage collected, secure erased, 100% TRIMed, or newly installed. The maximum speed will depend upon the number of parallel flash channels connected to the SSD controller, the efficiency of the firmware, and the speed of the flash memory in writing to a page. During this phase the write amplification will be the best it can ever be for random writes and will be approaching one. Once the blocks are all written once, garbage collection will begin and the performance will be gated by the speed and efficiency of that process. Write amplification in this phase will increase to the highest levels the drive will experience.[12]
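The contrast between sequential and random writes can be reproduced with a small simulation. The Python sketch below is a toy model under several assumptions that do not come from the sources: a page-mapped drive with 64 blocks of 32 pages, 75% of the pages exposed to the user, a greedy garbage collector that always reclaims the full block with the fewest valid pages, and a uniformly random write pattern. The class `ToySSD` and the function `run` are hypothetical names.

```python
import random


class ToySSD:
    """Page-mapped toy SSD with greedy garbage collection (illustrative only)."""

    def __init__(self, num_blocks=64, pages_per_block=32):
        self.pages_per_block = pages_per_block
        self.valid = [set() for _ in range(num_blocks)]  # live LBAs per block
        self.fill = [0] * num_blocks                     # programmed pages per block
        self.free = list(range(num_blocks))              # erased blocks
        self.active = self.free.pop()                    # block currently being filled
        self.where = {}                                  # LBA -> block with its live copy
        self.host_writes = 0
        self.nand_writes = 0

    def _append(self, lba):
        """Program one page in the active block, opening a new block if needed."""
        if self.fill[self.active] == self.pages_per_block:
            self.active = self.free.pop()
        self.valid[self.active].add(lba)
        self.fill[self.active] += 1
        self.where[lba] = self.active
        self.nand_writes += 1

    def _collect(self):
        """Reclaim the full block with the fewest valid pages (greedy policy)."""
        closed = [b for b, n in enumerate(self.fill)
                  if n == self.pages_per_block and b != self.active]
        victim = min(closed, key=lambda b: len(self.valid[b]))
        for lba in list(self.valid[victim]):
            self._append(lba)            # relocating valid data costs flash writes
        self.valid[victim].clear()
        self.fill[victim] = 0            # erase the block
        self.free.append(victim)

    def write(self, lba):
        """Handle one host page write."""
        while len(self.free) < 2:        # keep one erased block in reserve for GC
            self._collect()
        old = self.where.get(lba)
        if old is not None:
            self.valid[old].discard(lba) # the previous copy becomes stale
        self._append(lba)
        self.host_writes += 1

    @property
    def write_amplification(self):
        return self.nand_writes / self.host_writes


def run(pattern, user_pages=1536, host_writes=20000, seed=0):
    ssd = ToySSD()
    rng = random.Random(seed)
    for lba in range(user_pages):        # fill the user area once
        ssd.write(lba)
    for i in range(host_writes):
        lba = i % user_pages if pattern == "sequential" else rng.randrange(user_pages)
        ssd.write(lba)
    return ssd.write_amplification


print("sequential:", run("sequential"))
print("random:    ", run("random"))
```

Under these assumptions the sequential run reports exactly 1.0, because every block chosen for reclaiming is already fully stale. The random run reports a value above 1, and the exact figure depends on the spare capacity, the garbage collection policy, and the seed.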

Impact on performance

The overall performance of an SSD is dependent upon a number of factors, including write amplification. Writing to a flash memory device takes longer than reading from it.[16] An SSD generally uses multiple flash memory components connected in parallel to increase performance. If the SSD has a high write amplification, the controller will be required to write that many more times to the flash memory. This requires even more time to write the data from the host. An SSD with a low write amplification will not need to write as much data and can therefore be finished writing sooner than a drive with a high write amplification.[1][5]
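A back-of-the-envelope model makes the relationship explicit. The Python sketch below assumes that the flash write bandwidth is the only bottleneck and that every host byte costs WA bytes of flash writes. Both are simplifications, and the 400 MB/s figure is a made-up example rather than a measured value.

```python
def host_write_throughput(flash_mb_per_s, write_amplification):
    """Host-visible write rate if flash bandwidth is the only bottleneck."""
    if write_amplification <= 0:
        raise ValueError("write amplification must be positive")
    return flash_mb_per_s / write_amplification


for wa in (1.0, 2.0, 4.0):
    print(f"WA = {wa}: {host_write_throughput(400, wa):.0f} MB/s seen by the host")
```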

Product statements

In September 2008, Intel announced the X25-M SATA SSD with a reported WA as low as 1.1.[33][34] In April 2009, SandForce announced the SF-1000 SSD Processor family with a reported WA of 0.5 which appears to come from some form of data compression.[33][35] Before this announcement, a write amplification of 1.0 was considered the lowest that could be attained with an SSD.[16] Currently, only SandForce employs compression in its SSD controller.
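How compression can push the ratio below 1 can be shown with Python's standard `zlib` module. This is only a stand-in: the sources do not describe the algorithm SandForce actually uses, and the sketch ignores garbage collection and metadata overhead. The function name and the sample data are illustrative.

```python
import os
import zlib


def compressed_write_amplification(host_data):
    """Bytes that would reach flash after compression, per byte the host wrote."""
    flash_data = zlib.compress(host_data)
    return len(flash_data) / len(host_data)


repetitive = b"status=ok temperature=41C\n" * 4096   # highly compressible
random_like = os.urandom(len(repetitive))            # effectively incompressible

print(compressed_write_amplification(repetitive))
print(compressed_write_amplification(random_like))
```

Highly redundant data yields a ratio far below 1, while data that is already compressed or encrypted yields a ratio of about 1, so the benefit depends entirely on what the host writes.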

References

- Hu, X.-Y. and E. Eleftheriou, R. Haas, I. Iliadis, R. Pletka (2009). "Write Amplification Analysis in Flash-Based Solid State Drives". IBM. CiteSeerX 10.1.1.154.8668. Retrieved 2 June 2010.
- Smith, Kent (17 August 2009). "Benchmarking SSDs: The Devil is in the Preconditioning Details". SandForce. Retrieved 28 August 2012.
- Ku, Andrew (6 February 2012). "Intel SSD 520 Review: SandForce's Technology: Very Low Write Amplification". Tomshardware. Retrieved 10 February 2012.
- Thatcher, Jonathan (18 August 2009). "NAND Flash Solid State Storage Performance and Capability – an In-depth Look". SNIA. Retrieved 28 August 2012.
- Agrawal, N., V. Prabhakaran, T. Wobber, J. D. Davis, M. Manasse, R. Panigrahy (June 2008). "Design Tradeoffs for SSD Performance". Microsoft. CiteSeerX 10.1.1.141.1709. Retrieved 2 June 2010.
- Lucchesi, Ray (2008–09). "SSD Flash drives enter the enterprise". Silverton Consulting. Archived from the original on 31 May 2011. Retrieved 18 June 2010.
- Case, Loyd (8 September 2008). "Intel X25 80GB Solid-State Drive Review". Retrieved 28 July 2011.
- Kerekes, Zsolt. "Western Digital Solid State Storage - formerly SiliconSystems". ACSL. Retrieved 19 June 2010.
- Kerekes, Zsolt. "Flash SSD Jargon Explained". ACSL. Retrieved 31 May 2010.
- "SSDs - Write Amplification, TRIM and GC". OCZ Technology. Retrieved 13 November 2012.
- "Intel Solid State Drives". Intel. Retrieved 31 May 2010.
- Hu, X.-Y. and R. Haas (31 March 2010). "The Fundamental Limit of Flash Random Write Performance: Understanding, Analysis and Performance Modelling". IBM Research, Zurich. Retrieved 19 June 2010.
- https://pc.watch.impress.co.jp/docs/news/event/441051.html
- "TN-29-17: NAND Flash Design and Use Considerations". Micron (2006). Archived from the original on 19 July 2011. Retrieved 2 June 2010.
- Mehling, Herman (1 December 2009). "Solid State Drives Take Out the Garbage". Enterprise Storage Forum. Retrieved 18 June 2010.
- Conley, Kevin (27 May 2010). "Corsair Force Series SSDs: Putting a Damper on Write Amplification". Corsair.com. Retrieved 18 June 2010.
- Layton, Jeffrey B. (27 October 2009). "Anatomy of SSDs". Linux Magazine. Retrieved 19 June 2010.
- Bell, Graeme B. (2010). "Solid State Drives: The Beginning of the End for Current Practice in Digital Forensic Recovery?". Journal of Digital Forensics, Security and Law. Archived from the original on 23 April 2012. Retrieved 2 April 2012.
- "SSDs are incompatible with GPT partitioning?!". Retrieval date unknown.
- Bagley, Jim (1 July 2009). "Over-provisioning: a winning strategy or a retreat?". StorageStrategies Now. p. 2. Archived from the original on 4 January 2010. Retrieved 19 June 2010.
- Smith, Kent (1 August 2011). "Understanding SSD Over-provisioning". flashmemorysummit.com. p. 14. Retrieved 3 December 2012.
- Christiansen, Neal (14 September 2009). "ATA Trim/Delete Notification Support in Windows 7". Storage Developer Conference, 2009. Archived from the original on 26 March 2010. Retrieved 20 June 2010.
- Shimpi, Anand Lal (17 November 2009). "The SSD Improv: Intel & Indilinx get TRIM, Kingston Brings Intel Down to $115". AnandTech.com. Retrieved 20 June 2010.
- Mehling, Herman (27 January 2010). "Solid State Drives Get Faster with TRIM". Enterprise Storage Forum. Retrieved 20 June 2010.
- Shimpi, Anand Lal (18 March 2009). "The SSD Anthology: Understanding SSDs and New Drives from OCZ". AnandTech.com. p. 9. Retrieved 20 June 2010.
- Shimpi, Anand Lal (18 March 2009). "The SSD Anthology: Understanding SSDs and New Drives from OCZ". AnandTech.com. p. 11. Retrieved 20 June 2010.
- Malventano, Allyn (13 February 2009). "Long-term performance analysis of Intel Mainstream SSDs". PC Perspective. Retrieved 20 June 2010.
- "CMRR - Secure Erase". CMRR. Archived from the original on 10 June 2010. Retrieved 21 June 2010.
- "How to Secure Erase Your OCZ SSD Using a Bootable Linux CD". OCZ Technology (7 September 2011). Retrieved 10 February 2012.
- "The Intel SSD 320 Review: 25nm G3 is Finally Here". anandtech. Retrieved 29 June 2011.
- "SSD Secure Erase - Ziele eines Secure Erase". Thomas-Krenn.AG. Retrieved 28 September 2011.
- Chang, Li-Pin (11 March 2007). "On Efficient Wear Leveling for Large Scale Flash Memory Storage Systems". National ChiaoTung University, HsinChu, Taiwan. CiteSeerX 10.1.1.103.4903. Retrieved 31 May 2010.
- Shimpi, Anand Lal (31 December 2009). "OCZ's Vertex 2 Pro Preview: The Fastest MLC SSD We've Ever Tested". AnandTech. Retrieved 16 June 2011.
- "Intel Introduces Solid-State Drives for Notebook and Desktop Computers". Intel (8 September 2008). Retrieved 31 May 2010.
- "SandForce SSD Processors Transform Mainstream Data Storage". SandForce (8 September 2008). Retrieved 31 May 2010.